Getting People to Lump or Split Themselves: Pooling vs Separation

I. Lumping & Splitting vs Pooling & Separating

My goal in this short Essay is to show how an analytic vocabulary first developed by Michael Rothschild and Joseph Stiglitz to analyze insurance markets (some parallel ideas were developed by Michael Spence at roughly the same time) can shed light on a range of institutional design questions, from crime to contract damages. What forces lead people to pool together into groups that conceal some aspect of their individual heterogeneity? When should we want them to? Conversely, when is individualization appropriate or even necessary, and when is it pernicious? These are fundamentally questions of “lumping and splitting,” applied not to things but to people. In economics at least, they are also fundamentally questions about information: who has it, who needs it, how it can be obtained, and at what cost.

At its simplest, pooling just means suppressing or eliding information about a population’s underlying heterogeneity along some dimension. Separation entails revealing such information. (My focus will largely be on problems of screening, which arise in contexts where the party who seeks to obtain private information makes the first move and the informed party responds. In signaling models such as those of Spence, by contrast, the informed party moves first and faces the task of credibly conveying their private information to someone else.) Take, for example, a group of conference attendees, some of whom are vegetarians and some omnivores. Offering people a choice of steak or tofu will (probably) separate the two groups; offering only tofu will pool them, at least to the extent that an outside observer cannot distinguish between the strict vegetarians and the meat-eaters based on their choice of entree. (Actually, only partial separation is guaranteed, since some omnivores (e.g., Orthodox Jews) might prefer tofu, even though no vegetarians choose steak. I return to this important caveat later. By contrast, offering only steak might also (partially) separate the groups. Given a choice between steak and nothing, many of the vegetarians might choose not to eat anything at all, thereby revealing their identities by their choice to abstain.)

Of course, the simplest way to get accurate information about what people want to eat is just to ask them, and that’s the method we typically use. Since everyone has a strong reason to honestly volunteer their food preferences so that they can be matched with the appropriate entree, we can rely on self-interest to induce separation in this context.

The more interesting issues occur when one or more types of individuals would prefer that their type not be made public, or have an affirmative reason to masquerade as someone of a different type. Consider a group of cars travelling down a highway. Some (small) fraction of the vehicles are carrying illegal drugs, and their drivers would obviously prefer to be pooled with the innocent travelers so as to avoid detection. The police, by contrast, would prefer to separate out this group; so would the innocent drivers, at least if such separation could be achieved at a modest cost. But unlike the conference attendees, not all of the drivers benefit from separation. In particular, the drug couriers have an obvious motive not to reveal their identities, and will certainly not answer honestly if asked. So—as we will see below—we will need some other way of separating the two groups.

In other instances, individuals may not even know their own type, so they can’t even respond to a direct question. For example, some people are extremely gullible and might actually be willing to send $1,000 to a Nigerian Prince who emails them that he will reward them handsomely if they do so. Fortunately for them, such marks are quite rare, and it is not easy to separate them from the general population who would very quickly reject any such offer. The only way to discover whether someone is gullible is to try to gull them and see what happens. As Cormac Herley demonstrates, this explains why Nigerian email scammers often choose to disclose upfront that they are from Nigeria. This information is an obvious indicator of an intent to defraud, at least to most people. But that turns out to be a feature, not a bug: the scammers are looking to screen out all but the most gullible at the earliest stages of their scam (when such screening is cheap), so that they can concentrate their (expensive) attention in the later stages of the scam on those who are most likely to fall for it. Disclosing the senders’ Nigerian origins is actually a sensible way of filtering out all but the most credulous among their email recipients.

The issues here are related to the themes Lee Fennell takes up so brilliantly in her book. Like lumping and splitting, pooling and separation are about the relationship between wholes and parts. (In writing this Essay, I learned that there is a whole sub-field in philosophy devoted to these questions—mereology—albeit at a very high level of abstraction.) Are we better off taking groups as givens, or trying to decompose them into individuals who can be treated differently once they’ve identified themselves? When will pooling or separation happen on their own, and when will some kind of intervention be required to generate the desired result? These questions will not always have crisp answers, but the analytic frame of pooling and separation can at least highlight some of the important issues that are at play.

II. An Example from Insurance: Why Pooling May Enhance Welfare

In retrospect, it is not surprising that the notions of pooling and separation originated in insurance economics, since insurers have intuitively understood for centuries that a functioning market for their product requires the pooling of risks. Here’s a simple illustration.

Suppose we have 1,000 otherwise identical people living in the town of Springfield. Assume that a random 5 percent of the population (50 people) will need a kidney transplant over the next year; but nobody knows which specific individuals will require this medical intervention, including the individuals themselves. A kidney transplant costs $200,000, which coincidentally happens to be the entire wealth of every individual. Springfielders are all risk averse, and have identical utility functions U = ⎷W (where W denotes their wealth). Given these assumptions, it is straightforward to show that the entire population will want to join a mutual insurance group that offers to cover anyone’s kidney transplant for a premium of (5%✕$200,000 =) $10,000, the actuarially fair amount. (We’re assuming for simplicity that insurance can be provided at no cost. Expected utility without insurance is 0.95✕⎷200,000 + 0.05✕⎷0 = 424.85. Utility with insurance is ⎷(200,000 – 10,000) = 435.89, so everyone will prefer full insurance at the fair premium to no insurance.) Everyone will buy insurance, and the risk of a loss of wealth will therefore be spread through the community as a whole. In a sense, this is pooling by default: since nobody knows who will get sick, there is no underlying dimension of heterogeneity, and no available information to separate out.
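The arithmetic in this example can be checked with a few lines of Python. What follows is only a minimal sketch using the figures given above; the variable and function names are illustrative, and the last step (the largest premium a Springfielder would accept) is an extension that the example itself does not state.

```python
import math

WEALTH = 200_000          # each resident's wealth (from the example)
LOSS = 200_000            # cost of a kidney transplant (from the example)
P_LOSS = 0.05             # probability of needing a transplant
PREMIUM = P_LOSS * LOSS   # actuarially fair premium


def utility(wealth):
    """Square-root utility, U = sqrt(W), as assumed in the example."""
    return math.sqrt(wealth)


# Expected utility of going uninsured: keep everything with probability 0.95,
# lose everything with probability 0.05.
eu_uninsured = (1 - P_LOSS) * utility(WEALTH) + P_LOSS * utility(WEALTH - LOSS)

# Utility of paying the fair premium and being fully covered.
u_insured = utility(WEALTH - PREMIUM)

# Extension (not in the text): the largest premium P that still satisfies
# sqrt(WEALTH - P) >= eu_uninsured.
max_premium = WEALTH - eu_uninsured ** 2

print(f"fair premium:            {PREMIUM:,.0f}")
print(f"expected utility, none:  {eu_uninsured:.2f}")
print(f"utility, full insurance: {u_insured:.2f}")
print(f"max acceptable premium:  {max_premium:,.0f}")
```

Under these assumptions, the gap between the fair premium and the maximum acceptable premium is one way to measure how much each resident values the pooling of risk.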

Now, suppose that some technological advance makes it possible to predict exactly who will need a new kidney and who will be healthy. It’s pretty easy to see that this knowledge will doom the insurance market. Those who are sure they will not need a transplant will not wish to buy insurance at any price; what’s the point of paying to cover a “risk” that won’t materialize? Those who know they will need a new kidney will be desperate to buy insurance, but of course no insurer will sell it to them at any price less than the fair premium. But the fair premium for a known-to-occur risk is just the full amount of the loss, here $200,000, which obviously provides no insurance value at all to the policyholders. There are now two groups, which have fully separated from each other, and as a result, the possibility of risk pooling is destroyed. Aggregate welfare is reduced: with fairly-priced full insurance for everyone, the community’s total expected utility was 1000✕⎷190,000 = 435,890. With no insurance, aggregate utility is 50✕0 + 950✕⎷200,000 = 424,853. (Note that under many scenarios, it will not matter whether insurers can require testing or instead are banned from doing so.)
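The welfare comparison can be reproduced in the same way. The sketch below assumes, as the example does, costless insurance and square-root utility; the names are illustrative. It also checks that total wealth in the community is the same in both worlds, so the loss of welfare comes entirely from how that wealth is distributed.

```python
import math

N = 1_000              # residents of Springfield
N_SICK = 50            # residents who will need a transplant
WEALTH = 200_000
LOSS = 200_000
FAIR_PREMIUM = 10_000  # 5% x $200,000


def utility(wealth):
    """Square-root utility, U = sqrt(W), as assumed in the example."""
    return math.sqrt(wealth)


# Pooling: nobody knows who will be sick, so everyone pays the fair premium.
welfare_pooled = N * utility(WEALTH - FAIR_PREMIUM)
wealth_pooled = N * (WEALTH - FAIR_PREMIUM)

# Separation: the healthy go uninsured and the sick bear the full loss.
welfare_separated = (N - N_SICK) * utility(WEALTH) + N_SICK * utility(WEALTH - LOSS)
wealth_separated = (N - N_SICK) * WEALTH + N_SICK * (WEALTH - LOSS)

print(f"aggregate utility, pooled:    {welfare_pooled:,.1f}")
print(f"aggregate utility, separated: {welfare_separated:,.1f}")
print(f"welfare lost to separation:   {welfare_pooled - welfare_separated:,.1f}")
print(f"total wealth unchanged:       {wealth_pooled == wealth_separated}")
```

Because the predictive test moves no resources and prevents no illness, it only reshuffles who bears the loss; with risk-averse residents, that reshuffling lowers aggregate utility.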

The moral? Pooling was desirable, and the technological innovation that led to personalization destroyed the grounds for sharing risk by shredding the Rawlsian veil of ignorance that made insurance possible. (This insight originated with Jack Hirshleifer, who showed that in a pure exchange economy, “the community as a whole obtains no benefit . . . from either the acquisition or dissemination . . . of private foreknowledge” (emphasis in original). Put differently, predicting which particular person will be the victim of some harm (about which nothing can be done) is not socially useful because such a prediction is purely distributional information, with no efficiency consequences. Indeed, the information will be socially harmful if its revelation prevents productive risk spreading.)

III. An Example from Contract Law: Why Pooling May Be Inefficient

But before we get too enthusiastic about pooling, consider a different example from a pioneering article by Ian Ayres and Robert Gertner. (For a lucid exposition, see Douglas Baird.) Ayres and Gertner model the classic case of Hadley v. Baxendale, in which a mill owner sought damages from a carrier who failed to promptly return the mill’s shaft from the repair shop, as promised. Since the shaft was essential and the mill had no backup, it couldn’t operate during the time when the part was delayed, and it sought to recover its lost profits for that period.

In the Ayres and Gertner model, there are two types of mills: some have no backup shafts, and must remain closed while their shaft is being fixed, necessitating fast shipping; others do have a backup, and hence have no need for speed. Only the owner knows which type of mill he has, and he can choose to disclose that information to the carrier or not. (As is typical, Ayres and Gertner assume that the carrier knows the industry-wide prevalence of backups, so in the absence of any other information, it would charge the fair price (taking into account the possibility of damages, if it is liable) for the population as a whole.) Carriers, in turn, can choose a reliable (never-delayed, but more expensive) or risky (sometimes-delayed, but cheaper) mode of transportation. Crucially, Ayres and Gertner assume that most of the mills do have a backup shaft, meaning that unless told otherwise, the carrier has no reason to foresee that there will be substantial damages from delay.

Ayres and Gertner consider two alternative legal regimes, one in which carriers are only responsible for “foreseeable” damages from a late shaft, and one in which they are responsible for all such damages, whether foreseeable or not. Their model recognizes that both of these are just default rules: whatever the baseline, parties are free to contract for something different (enhanced damages in the first case, or limited damages in the second) if they prefer. But there is a transactional friction: contracting out of the default rule in favor of the alternative is costly.

The powerful conclusion of the Ayres and Gertner model is that there can be a profound difference in the equilibria under the Hadley rule (unforeseeable damages are not recoverable) as opposed to its opposite (all damages are recoverable, foreseeable or not). In their story, the Hadley rule leads the high-cost mills to self-identify so as to receive appropriate damages if something goes wrong; the lack of foreseeability of high damages is guaranteed by the assumption that most mills do have a backup shaft. In other words, it induces separation between the two types of mills. That is not the case under the anti-Hadley rule, however. There, the transactions costs are such that the many low-cost mills do not find it worthwhile to self-identify. Doing so lowers their shipping charges, but not by enough—given the rarity of high-cost mills—to overcome the transaction costs of contracting out of the default. Thus, the two types are pooled in equilibrium, not separated. (The authors are careful to note that this result is not robust—it depends on the size of the transactions costs and on the proportion of high- and low-damages mills in the population. Inefficient pooling—and its relationship to the foreseeability rule—is a possibility, not a certainty.)
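The opt-out logic under the anti-Hadley rule can be illustrated with a deliberately simplified sketch. This is not Ayres and Gertner's actual model: it assumes that carriers price expected damages into the shipping charge, that there is a single probability of delay, and that a low-damages mill identifies itself only when the price saving exceeds the transaction cost. Every name and number below is hypothetical.

```python
def low_type_saving(share_high, p_delay, dmg_high, dmg_low):
    """Price reduction a low-damages mill gets by identifying itself.

    Under a full-liability default, the pooled shipping charge includes the
    expected damages of the average mill; an identified low-damages mill
    pays only for its own expected damages.
    """
    pooled_surcharge = p_delay * (share_high * dmg_high + (1 - share_high) * dmg_low)
    separate_surcharge = p_delay * dmg_low
    return pooled_surcharge - separate_surcharge


def low_type_opts_out(share_high, p_delay, dmg_high, dmg_low, transaction_cost):
    """True if contracting out of the default is worth the transaction cost."""
    return low_type_saving(share_high, p_delay, dmg_high, dmg_low) > transaction_cost


# Hypothetical numbers: high-damages mills are rare (5% of the population).
rare = low_type_opts_out(share_high=0.05, p_delay=0.1,
                         dmg_high=1_000, dmg_low=100, transaction_cost=10)

# Same numbers, except that high-damages mills are common (50%).
common = low_type_opts_out(share_high=0.50, p_delay=0.1,
                           dmg_high=1_000, dmg_low=100, transaction_cost=10)

print(f"low-damages mills separate when high types are rare:   {rare}")
print(f"low-damages mills separate when high types are common: {common}")
```

In this toy version the saving from self-identification shrinks as high-damages mills become rarer, which is why pooling survives only for some combinations of transaction costs and population shares.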

In contrast to our insurance example, however, pooling reduces welfare in this context. There are two related reasons for this difference. In the insurance context, pooling is welfare enhancing because everyone is risk averse, and everyone benefits from insurance that is only possible if people are (equally) ignorant about the future. In the Hadley scenario, by contrast, pooling is inefficient because it conceals socially valuable private information—each mill’s vulnerability to harm if its shaft is delayed. This information is valuable because it allows for efficient reliance by the carrier: Expensive-but-reliable shipping is wasteful for mills that have a backup shaft and don’t need fast delivery, but it is appropriate for the few mills whose shafts absolutely, positively need to be there overnight. Cheaper-but-riskier shipping is efficient for most mills, but reduces contractual surplus for the few mills without backup shafts. But in order to appropriately tailor the type of shipping to the needs of its customer, the carrier has to know what kind of customer it has, and that private information belongs to the mill, which will choose whether to reveal it or not, depending on the background damages rule that governs late shipments and the costs of identifying itself.

These two stylized examples resonate with themes in Fennell’s book. For example, they illustrate that there is nothing natural or desirable about either pooling or splitting. By suitably regulating background conditions, society can decide (at least to some extent) how much pooling occurs and how much separation. Just as there’s nothing optimal about large land holdings or smaller ones, there’s nothing a priori desirable about pooling or separation. Sometimes (Nigerian scammers, insurance) pooling is preferable; sometimes (efficient contracting, crime) separation is. Everything depends on what the information created by separation (or hidden by pooling) is being used for and how costly it is to obtain.

Even when the information has a socially valuable use, however, another important insight about pooling and separation is that the process of inducing separation (or pooling) will also matter. To see how this is so, let’s consider some examples from the world of crime and criminal law.

IV. Pooling, Separation, and Crime

Criminal law has been the locus of many creative attempts to generate separation between the guilty and the innocent. Peter Leeson shows how medieval trials by ordeal might actually have provided effective separation, given parties’ beliefs in the omnipresence of divine intervention in human affairs. Daniel J. Seidmann and Alex Stein argue that the Fifth Amendment protects the innocent (not the guilty), based on a somewhat similar rationale. (In their model, the right to silence encourages the guilty to remain silent, thereby separating them from the innocent, whose truthful testimony therefore becomes more credible precisely because they are not pooled with the guilty.)

As noted earlier in the context of drug couriers, those who have committed a crime typically know their own culpability. In order to avoid prosecution, therefore, criminals such as drug couriers attempt to pool with the (innocent) remainder of the population. Since criminals want to pool, we will need to impose some mechanism to induce separation if we want to apprehend them. We should probably stop to consider, however, whether the non-couriers have any incentive to signal that they are in fact not carrying drugs. Such a signal would require, as Spence first noted, some visible “marker” that is difficult to fake, in the sense that it is more costly for the guilty to acquire than it is for the innocent. Perhaps the Department of Homeland Security’s Trusted Traveler Program is an example of signaling by non-criminals, but it’s hard to think of many others.

One obvious possibility is simply to stop every car on the road and search it for drugs. That’s illegal, however:

It would be intolerable and unreasonable if [an officer] were authorized to stop every automobile on the chance of finding [contraband], and thus subject all persons lawfully using the highways to the inconvenience and indignity of such a search. . . . [T]hose lawfully within the country, entitled to use the public highways, have a right to free passage without interruption or search. Carroll v. United States at 153–54.

Moreover, a major drawback of this technique is that it requires a lot of costly searching of innocent drivers in order to find a small number of couriers. This kind of brute-force unpooling has little to recommend it, unless we think the benefits of catching the couriers are extremely high—say, if the couriers were terrorists carrying a bio-weapon.

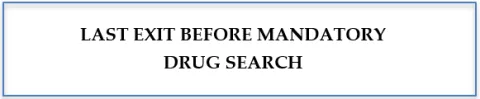

But there is an alternative screening mechanism in this context: a “ruse checkpoint.” To deploy this technique, police first choose a lightly used exit on a rural segment of a highway that they know is often used to transport drugs between two large cities. Just before the exit, they post a sign reading:

They then search every car that gets off at the exit. Of course, there is no mandatory drug search further down the road, which is what makes this a “ruse”—a term of art designating a deception in which the police do not disguise their presence, only their purpose or motive. Ruses are permissible under some circumstances. In one well-known case, police approached a suspect’s mother and gained entry into his house by telling her they had received a report of “‘a burglary or burglar alarm’ at that address.” The court was willing to admit the evidence uncovered pursuant to this ruse.

The law of ruse checkpoints is unsettled, however. Some courts have approved of them, holding that a driver’s evasive behavior in exiting a highway to avoid a ruse drug checkpoint supports a finding of reasonable suspicion that justifies a search. Others have held that evidence of drugs seized in a search that was triggered through a ruse checkpoint was inadmissible because the search was not sufficiently “individualized.”

Ruse checkpoints have unfortunately received scant scholarly attention, but as Kiel Brennan-Marquez and I suggest in an unpublished draft, the ruse checkpoint technique is likely to be an effective way of separating at least some drug carriers from the innocent drivers on the highway with whom they are seeking to pool. A properly chosen rural exit will be unattractive to all but a few of the legitimate users of the road. And many criminals are naive or anxious or not thinking carefully, so the chance to get off the highway before a putative drug search will seem attractive to them. Indeed, under plausible assumptions, ruse checkpoints can be substantially more effective than other search techniques used by the police to find contraband, which typically have success rates in the range of 1 percent (that is, 1 in 100 of those stopped are carrying contraband). The technique’s overall efficacy depends, among other things, on the proportion of all couriers who are naive or scared or impulsive enough to fall for the ruse. But for a remote exit suitably chosen to have few legitimate users, the hit rate (proportion of all those who exit who are carrying drugs) can be very high, even if most drug couriers are not fooled by the posted warning. We estimate that hit rates of 2 to 8 percent are plausible even if most criminals are not fooled by the ruse checkpoint; success rates would be substantially higher if criminals are easier to fool.
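The dependence of the hit rate on the choice of exit can be made concrete with a small calculation. The sketch below is not the model from the unpublished draft; it simply treats the hit rate as the share of exiting cars that carry drugs, and all of the input values are hypothetical.

```python
def hit_rate(courier_share, courier_exit_prob, innocent_exit_prob):
    """Share of cars taking the exit that are carrying drugs.

    courier_share:      fraction of all cars on the highway carrying drugs
    courier_exit_prob:  fraction of couriers who fall for the ruse and exit
    innocent_exit_prob: fraction of innocent drivers who use the exit anyway
    """
    guilty_exiters = courier_share * courier_exit_prob
    innocent_exiters = (1 - courier_share) * innocent_exit_prob
    total_exiters = guilty_exiters + innocent_exiters
    if total_exiters == 0:
        return 0.0  # nobody exits, so there is nobody to search
    return guilty_exiters / total_exiters


# Hypothetical inputs: 1% of cars carry drugs and only 10% of couriers are fooled.
remote_exit = hit_rate(courier_share=0.01, courier_exit_prob=0.10,
                       innocent_exit_prob=0.02)
busy_exit = hit_rate(courier_share=0.01, courier_exit_prob=0.10,
                     innocent_exit_prob=0.10)

print(f"hit rate at a remote exit: {remote_exit:.1%}")
print(f"hit rate at a busy exit:   {busy_exit:.1%}")
```

When innocent drivers exit at the same rate as couriers, the checkpoint does no better than stopping cars at random; the gain comes entirely from choosing an exit that legitimate users rarely take.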

V. Fennellifying Pooling and Separation

One of the many virtues of Lee Fennell’s terrific book is the playful way it deconstructs and reconstructs seemingly familiar problems, showing how the analysis changes depending on one’s perspective. There are examples on virtually every page, but one of my favorites is the parable of the cricket ball and the fence (based on the English case of Bolton v. Stone), which perfectly illustrates the insights to be obtained by thinking about cost-justified precautions in a discrete (some fence, or none-at-all) vs continuous (any height of fence is possible) fashion.

In that spirit, I would like to try to complexify the analysis of pooling and separation, in two related ways.

A. Partial Separation

Return to the vegetarian/meat-eater story with which we began, and notice that there is an important difference between the two groups: vegetarians define themselves as people who don’t eat meat; but meat-eaters typically do not define themselves as exclusive carnivores. (Of course, the internet reveals that there are actually some exclusive carnivores, at least if you can trust a website called treehugger.com.)

In fact, the relevant distinction is really between vegetarians and omnivores. In turn, that means that we cannot guarantee that the choice of steak or tofu will perfectly separate the two groups, because on any given day, some omnivores might choose tofu, even if none of the vegetarians choose beef. This equilibrium is therefore only partially or incompletely separating.

For many purposes, the fact that we cannot achieve full separation won’t matter much. After all, the purpose of offering a choice of meals is to properly match attendees with their preferred food, so if some of the omnivores choose to eat tofu, who really cares?

But in other contexts, the degree and nature of partial separation can be crucial. With ruse checkpoints, for example, we care about the degree of homogeneity within both the exiters and the non-exiters. First, we have to acknowledge that the ruse checkpoint will only trap some of the couriers—those who are naive or panicky enough to fall for the trick. If the drug carriers are all sober and foresightful, the checkpoint won’t separate any of them from the rest of the population and will therefore be useless. As it stands, the checkpoint will only fool the least sophisticated of the criminals, and we can only hope that there are enough of them to make the operation worthwhile.

But the second dimension of partial separation is even more important. The checkpoint doesn’t only attract guilty couriers trying (ineffectually) to avoid capture by the police; it also traps legitimate users of the exit. These would include local residents, as well as people who are not carrying drugs but are just trying to avoid the hassle of a police checkpoint. Any burden placed on innocent travellers is obviously a cost that needs to be reckoned with in assessing the utility of the ruse checkpoint scheme. (Indeed, these considerations are what motivate choosing a lightly used exit in the first place.)

Sometimes partial separation is good enough. If the right to silence induces some of the guilty not to testify on their own behalf (removing them from the pool of testifiers and thereby strengthening the reliability of those who do proclaim their innocence), that’s probably welfare enhancing, although our answer will naturally depend on how we value wrongful acquittals versus wrongful convictions. But in general, we will almost always need to worry about whether the sorting mechanism we devise is powerful enough to cleanly “carve nature at the joints,” so that the groups it produces are sufficiently homogeneous to be useful. Perfect separation will often be unattainable, and less than perfect separation has important costs that must be reckoned with.

B. Multidimensional Selection

So far, we have implicitly assumed that there are only two groups to be separated—the guilty and the innocent; the omnivores and the vegetarians; the healthier and the less healthy. Even with only two discrete groups, it will not always be possible to perfectly distinguish them. But it is obviously naive to imagine that life can be ordered along a single dimension.

In studying insurance markets, for example, most of the analysis of pooling and separation has taken place using models that expressly assume that the only relevant dimension of heterogeneity in the population is riskiness—some individuals have higher and some lower probabilities of loss. But it is obvious that an individual’s demand for insurance depends not only on her riskiness but also on the degree to which she is averse to risk, which varies widely across individuals.

Unfortunately, once one allows for heterogeneity in risk aversion—and especially if risk aversion can be correlated with riskiness—essentially all of the standard predictions about pooling and separation break down. As Abraham and Chiappori write, “the abstract theory of bidimensional adverse selection is extremely difficult, and little is known of the exact form of the resulting equilibrium. . . .” Kenneth S. Abraham & Pierre-Andre Chiappori, Classification Risk and Its Regulation, in D. Schwarcz and P. Siegelman, eds., Research Handbook on the Economics of Insurance Law (2015) at 316. (To see how hairy things can get, see here or sources discussed here.)

To see how multidimensional selection can work, consider David Hemenway’s insight into what he termed “Propitious Selection.” Hemenway noticed that across a variety of settings, those with insurance tend to be less risky than those who are uninsured. For example, motorcyclists without helmets are less likely to carry insurance than the general population (despite higher risks and identical premiums). Of course, this is the exact opposite of what is predicted by standard models of adverse selection, in which insurance is most attractive to (those who know they are) the riskiest. What seems to be going on here, and in several other contexts, is that the most risk averse are also the most careful. They value insurance not because they know they will need it, but because they find uncertainty to be so psychologically painful that, even though they aren’t likely to experience a loss, the mere possibility leaves them looking for a financial backstop.
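A stylized two-type example shows how selection on risk aversion can reverse the usual pattern. This is a sketch under strong assumptions, not Hemenway's analysis: it uses constant relative risk aversion (CRRA) utility, of which the square-root utility in the Springfield example is the special case with coefficient 0.5, and the wealth, loss, premium, and type parameters are all made up for illustration.

```python
def willingness_to_pay(wealth, loss, p_loss, gamma):
    """Largest premium a person would pay for full insurance.

    Assumes CRRA utility u(w) = w**(1 - gamma) / (1 - gamma) with gamma != 1;
    gamma = 0 is risk neutrality. Requires wealth - loss > 0.
    """
    if gamma == 1:
        raise ValueError("gamma = 1 (log utility) is not handled in this sketch")
    power = 1 - gamma
    expected = (1 - p_loss) * wealth ** power + p_loss * (wealth - loss) ** power
    certainty_equivalent = expected ** (1 / power)
    return wealth - certainty_equivalent


# Two hypothetical types facing the same premium.
WEALTH, LOSS, PREMIUM = 100, 90, 15
types = {
    "careful": {"p_loss": 0.02, "gamma": 3},  # low risk, very risk averse
    "risky": {"p_loss": 0.10, "gamma": 0},    # high risk, risk neutral
}

wtp = {name: willingness_to_pay(WEALTH, LOSS, t["p_loss"], t["gamma"])
       for name, t in types.items()}
buyers = [name for name, value in wtp.items() if value >= PREMIUM]

for name, value in wtp.items():
    print(f"{name}: willing to pay up to {value:.2f}")
print(f"buyers at a premium of {PREMIUM}: {buyers}")
```

With these numbers only the careful, low-risk type buys coverage, so the insured pool is less risky than the uninsured one, the opposite of what a model with heterogeneity in risk alone would predict.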

Selection on both risk and risk aversion may completely upend some of the standard conclusions about how insurance markets work. Just such a negative relationship between insurance purchase and riskiness has been documented in a number of studies (discussed here) although many empirical investigations also find the standard positive correlation between insurance purchase and riskiness that is indicative of adverse selection. And the implications are far-reaching. The entire nature of the insurance market equilibrium (if there even is one) depends on how selection occurs, and there seems to be neither a theoretical nor an empirical consensus on how it works.

Conclusion

Like lumping and splitting, pooling and separation are ubiquitous in life and in the law. Properly managing the degree of aggregation is very tricky, to say the least. Sometimes, law requires the suppression of individualizing information and supports pooling, as when employers are banned from considering gender in assessing contributions for defined benefit pension plans, or insurers are prohibited from any use of gender in pricing. (As Liran Einav & Amy Finkelstein point out, the welfare effects of a ban on gendered pricing are uncertain on a theoretical level, and depend on the degree to which “individuals have some residual private information about their risk that is not captured by their gender,” as well as a variety of other empirical complications.) Sometimes, the law encourages separation and information revelation. While there is no general theory about which is preferable, I hope I’ve shown that there are useful insights to be had from seeing the world through this analytic lens, which I see as complementary to the themes in Lee Fennell’s book.