Experimental Methods in Constitutional Law

Most constitutional law and international human rights scholarship is doctrinal, and where it is empirical, it is mostly qualitative. Less than 2% of the scholarship is quantitative, and only roughly 45% of quantitative research tries to establish causal relationships; experimental research makes up only about one third of that. Within research employing experimental methods, 90% of studies are survey experiments. The rest are abstract lab and internet experiments and randomized controlled trials (RCTs). In this Essay, we discuss the role of experiments in constitutional law scholarship and the relative advantages of different experimental methods. We conclude that although there is widespread skepticism about abstract lab experiments, they play a distinctive role in theory development and are complementary to the more widely used vignette or survey experiments.

I. Introduction

A right to legal representation has recently been introduced in some Chinese provinces but not in others. At a recent conference on empirical legal studies held in Chengdu, a Chinese public law scholar presented evidence from a machine learning study that the right to legal representation might have hurt defendants. This conclusion seems very counterintuitive, and the a priori case that legal representation could hurt defendants seems rather weak. Yet, if pressed, we could come up with potential reasons why this might be the case: Prosecutors and judges might resent defense lawyers and treat those represented by a lawyer more harshly. Judges might take the fact that somebody hires a lawyer as a signal of guilt. The Chinese scholar’s study did not settle the question. Most importantly, it did not control for selection effects, namely the problem that defendants who were in higher legal jeopardy might have hired a lawyer while those who knew they had nothing to fear thought it unnecessary. Even without assuming a signaling effect, it is easy to see why, under such selection, we would observe harsher sentences for the pool of represented defendants.
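The selection problem can be made concrete with a small simulation. The sketch below is purely illustrative: the function name `simulate_selection`, the sentencing rule, and every number in it are our assumptions, not estimates from the Chengdu study or any other data. It assumes that a lawyer actually shortens the sentence, but that defendants in higher legal jeopardy are more likely to hire one.

```python
import random
from statistics import mean

def simulate_selection(n=20_000, lawyer_effect=-0.5, seed=0):
    """Naive gap in mean sentences (represented minus unrepresented).

    All numbers are illustrative assumptions, not estimates from real data.
    """
    rng = random.Random(seed)
    represented, unrepresented = [], []
    for _ in range(n):
        jeopardy = rng.random()            # latent legal jeopardy in [0, 1)
        hires = rng.random() < jeopardy    # higher jeopardy -> more likely to hire
        # Sentence (in years) rises with jeopardy; a lawyer shifts it by lawyer_effect.
        sentence = 5.0 * jeopardy + (lawyer_effect if hires else 0.0) + rng.gauss(0, 0.5)
        (represented if hires else unrepresented).append(sentence)
    return mean(represented) - mean(unrepresented)

naive_gap = simulate_selection()
print(f"naive gap (represented - unrepresented): {naive_gap:.2f} years")
```

Under these assumptions the represented pool receives longer sentences on average even though, by construction, representation helps every individual defendant. A naive comparison of the two pools would therefore get the sign of the effect wrong.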

One of the senior scholars in the audience was also quick to give the presenter a thorough smackdown, saying in no uncertain terms that the result was so absurd that the only conclusion could be that his fancy method was wrong. But this is, of course, a highly problematic way of looking at things. Sometimes the truth is counterintuitive and, moreover, such counterintuitive truth is usually the most useful, as it inspires non-obvious reform or policies. In the case of legal representation for criminal offenses, such an insight, for example, would militate in favor of making legal representation mandatory in order to shut down the signaling channel and the negative reciprocity by judges who might feel provoked by a given defendant’s decision to seek representation.

So, how do we find the answer to this question? Ideally, we would take all people arrested for, say, theft in a particular court district in which no such representation rights yet exist. We could then randomly assign a lawyer to some of them and compare the outcomes from the treated group with those from the untreated group.

The proposed design would be a randomized controlled trial (RCT), which is often considered the gold standard of empirical scholarship. The main advantage of this method is that one can establish causality without strong modeling assumptions. There is a treatment group and a control group, and random assignment makes the two groups comparable in expectation. If the outcome metric differs between them by more than chance would explain, the difference can be attributed to the treatment.
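In a finite sample, the two groups will differ somewhat by chance alone, so the comparison is usually accompanied by a test. One option that relies on nothing beyond the random assignment itself is a permutation test. The following is a minimal sketch; the function `permutation_test` and the sentence lengths are hypothetical and serve only to illustrate the logic.

```python
import random
from statistics import mean

def permutation_test(treated, control, n_perm=5_000, seed=0):
    """Return (observed difference in means, two-sided permutation p-value)."""
    rng = random.Random(seed)
    observed = mean(treated) - mean(control)
    pooled = list(treated) + list(control)
    k = len(treated)
    extreme = 0
    for _ in range(n_perm):
        # Re-draw the assignment: under the null, group labels are arbitrary.
        rng.shuffle(pooled)
        diff = mean(pooled[:k]) - mean(pooled[k:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction so the p-value is never exactly zero.
    return observed, (extreme + 1) / (n_perm + 1)

# Hypothetical sentences in months, for illustration only.
treated = [10, 12, 9, 11, 8, 10, 9, 11]      # randomly assigned a lawyer
control = [14, 13, 15, 12, 16, 14, 13, 15]   # no lawyer assigned
diff, p = permutation_test(treated, control)
print(f"difference in means: {diff:.2f} months, p = {p:.4f}")
```

The test asks how often a difference at least as large as the observed one would arise if the labels "treated" and "control" were reshuffled at random, which is the only source of randomness the design itself guarantees.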

However, running RCTs is not always feasible. Moreover, even if an RCT were feasible, its results would not necessarily tell us much beyond the particular setting we are studying. The RCT method is particularly strong when we set out to study practically important questions like the one in our leading example. However, it is not as valuable for theory development. In particular, it is not well suited to studying the mechanisms that lead to aggregate effects. Take Netta Barak-Corren’s important field experiment in which she shows that the U.S. Supreme Court’s decision in Masterpiece Cakeshop v. Colorado Civil Rights Commission (2018) has influenced wedding service vendors’ willingness to discriminate against same-sex couples. Does that apply to every court decision? Or just those that trigger polarized media coverage? If so, might the media coverage by itself create the same effect? And, even if the court decision causes the change in behavior, does it work on an individual level or through the community? These questions cannot easily be answered in a field study because the researcher must work with the world as it is, and not with the world that the researcher would like to construct to study the question at hand. In such a case, we need lab experiments, which give us a higher degree of control.

In Part II of this short Essay, we first present an overview of the main methodological approaches in existing constitutional law and international human rights scholarship, their relative importance, and how they have evolved over time. In Part III, we discuss the advantages of abstract lab experiments and present a research question that we believe lends itself to such an analysis. We also explain how to address external validity concerns. Part IV concludes.

II. Research Methods in Constitutional Law

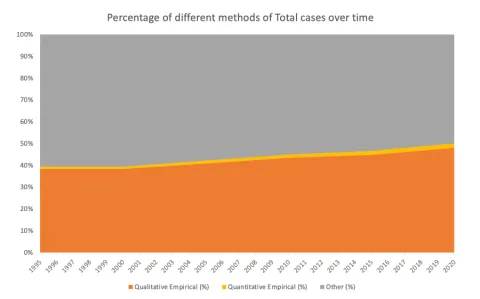

In this Part, we show the results from a study in which we used a bag-of-words methodology on the corpus of articles contained in the HeinOnline Repository to find out which methods are used in constitutional and international public law scholarship. Not surprisingly, we find that more than 50% of constitutional law scholarship is doctrinal or theoretical (Figure 1). Over the past twenty-five years, this percentage fell from around 60% to roughly 50%.
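For readers unfamiliar with the approach, a bag-of-words classification can be sketched in a few lines. The example below is a stylized illustration and not the procedure used for the figures in this Part: the keyword lists in `METHOD_KEYWORDS`, the threshold `min_hits`, and the function `classify_method` are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical keyword lists; the Essay does not report the terms actually used.
METHOD_KEYWORDS = {
    "experimental": {"experiment", "vignette", "randomized", "treatment"},
    "quantitative": {"regression", "dataset", "coefficient", "statistically"},
    "qualitative": {"interview", "interviews", "ethnographic", "fieldwork"},
}

def classify_method(text, min_hits=2):
    """Label a text by the method whose keywords occur most often.

    Falls back to 'doctrinal/theoretical' when no method reaches min_hits.
    """
    # Bag of words: word order is discarded, only counts are kept.
    tokens = Counter(re.findall(r"[a-z]+", text.lower()))
    scores = {m: sum(tokens[w] for w in words) for m, words in METHOD_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] >= min_hits else "doctrinal/theoretical"

sample = "We ran a vignette experiment and randomized respondents into a treatment group."
print(classify_method(sample))
```

Because such a classifier only counts words, it cannot tell an article that conducts an experiment from one that merely discusses experiments at length, a limitation we return to in footnote 1.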

Figure 1: Constitutional Law Scholarship

The rest is empirical, but most of the empirical research is qualitative, as in case studies and interviews. Only a tiny sliver—less than 2% of the scholarship—is quantitative empirical research.

This is, of course, what the new book by Adam Chilton and Mila Versteeg is about, and in future years we can analyze the advent of this path-breaking book in an event study to examine whether it was able to have a measurable impact on the trajectory of the field.

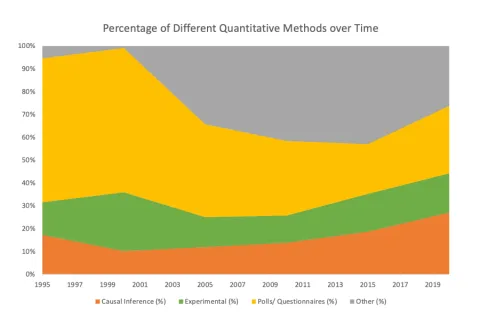

Figure 2 shows that within quantitative constitutional law scholarship, methods trying to get at causality (causal inference and experimental) are on the rise, but they so far only account for around 45% of quantitative empirical studies.

Figure 2: Quantitative Constitutional Law Scholarship

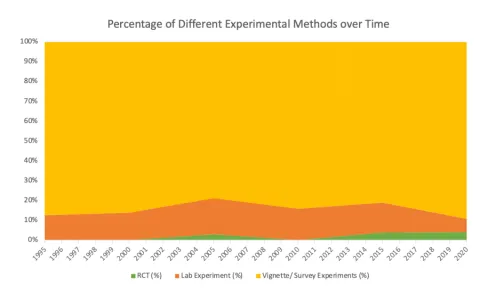

Within experimental scholarship in constitutional law, Figure 3 shows that almost all is accounted for by vignette and survey experiments. This is also the experimental methodology employed in the book by Chilton and Versteeg (alongside the large-N statistical analysis and case studies), and in Cope and Crabtree’s work on the degree of American citizens’ support, or lack thereof, for separating migrant families at the U.S. border. Only about 10% of experimental papers are either RCTs or studies that prominently report the results from more abstract lab or online experiments.1

Figure 3: Experimental Constitutional Law Scholarship

Abstract experiments are experiments that confront subjects with stripped-down scenarios that let them make decisions in an artificial and abstract world in which their choices have payoff consequences. Unlike the design of vignette studies, the experimental design of abstract studies does not provide rich descriptions of scenarios in which actual decisionmakers make their decisions.

III. Abstract Experiments

There are many reasons to doubt that anything useful can be learnt from abstract lab experiments to answer questions relevant to constitutional law. After all, these experiments are best at identifying regularities in individual human behavior. But often what we want to study in constitutional law is the result of collective action. Moreover, human decision-making is embedded in institutions, and these institutions interact with, frame, and potentially debias human decision-making. This is particularly relevant in the constitutional context, where institutions tend to be “thick” in the sense that they support shared values, traditions, and a common history.

So, in this short Essay we face the daunting task of defending the value of a methodology in constitutional law that is rarely used and against which very good arguments can be and have been raised.

One reason to be interested in individual decision making is that often the ultimate decision we want to study is indeed an individual decision, as when police officers on the ground decide whether to torture a suspect or when supervisors decide on whether to turn a blind eye to a violation of a right. There is also an old methodological idea in the social sciences: as every social phenomenon arises out of individuals’ interactions, every theory suggesting a particular behavioral response in the aggregate must at least be capable of rationalization on the individual level.

We now discuss, as an example, a research question that would be difficult to study with field data and for which RCTs are not feasible.

There is a modern trend to have an increasing number of aspirational rights in constitutions. A low-income country might have the right to universal, free health care. This right might exist alongside the right not to be detained without trial or not to be tortured. Observational data suggests an inverse relationship between the number of aspirational rights and the human rights record of the respective country. However, is there a causal relationship? Or is it just that those countries that have a shorter constitutional tradition tend to have more constitutionally protected rights (because it’s a modern trend) and a worse human rights record (because of the lack of a long constitutional tradition)?
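The confounding story can be illustrated with simulated data. In the sketch below, which rests entirely on our own illustrative assumptions (the variable names, coefficients, and noise levels are invented), the age of the constitutional tradition drives both the number of aspirational rights and the human rights record, while the rights themselves have no effect at all.

```python
import random
from statistics import mean

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5

def residualize(y, x):
    """Residuals of y after a simple least-squares regression on x."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return [b - my - slope * (a - mx) for a, b in zip(x, y)]

rng = random.Random(0)
# Illustrative assumption: age drives both variables; rights have NO effect on the record.
age = [rng.uniform(5, 200) for _ in range(2_000)]         # years of constitutional tradition
rights = [20 - 0.08 * a + rng.gauss(0, 2) for a in age]   # newer constitutions list more rights
record = [30 + 0.25 * a + rng.gauss(0, 10) for a in age]  # older traditions, better record

raw = pearson(rights, record)
adjusted = pearson(residualize(rights, age), residualize(record, age))
print(f"raw correlation: {raw:.2f}; after adjusting for age: {adjusted:.2f}")
```

In this artificial world, the raw data show a strong inverse relationship that largely vanishes once age is accounted for. With real data, of course, we rarely know whether all relevant confounders have been measured, which is why observational evidence alone cannot settle the causal question.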

It is clear that an RCT to answer this question is unavailable, yet the question is highly relevant. In principle, two methods are available to study this question: vignette studies that describe specific scenarios and abstract experiments. We claim that those two methods, which often pit economists (advocating abstract experiments) against psychologists and political scientists (advocating vignette studies), are actually complementary.

A vignette design, for example, would give subjects a stylized catalogue of constitutionally protected rights and would then ask participants what they would do under an aspirational or a realistic bill of rights. It becomes immediately clear that recruiting students or participants on mTurk and Prolific and asking them to imagine they are parliamentarians, civil society leaders, or Supreme Court justices (or, at least, Supreme Court clerks) will not produce results with much external validity, which is presumably the great strength of the vignette method. This method is most convincing when people who are making the sort of decisions we are interested in studying in real life are confronted with a typical scenario they would encounter in exercising their function.

Asking a representative sample of adults and describing a rich scenario to them is a good design if we wish to study how particular factors affect people’s voting behavior or when we are interested in studying decisions by juries, which are made up of randomly selected laypeople. In our case, a relevant subject pool of actual decision-makers might, for example, be civil society leaders whom we could ask about their willingness to organize a protest, or parliamentarians whom we could ask about their likely voting behavior.

For example, we could describe a hypothetical country, which has an authoritarian past but successfully transitioned to democracy two decades ago. This country could now be facing the risk of secession of a province inhabited by an ethnic minority, and tensions have led to largely peaceful protests. However, the government is concerned the separatist tendencies will grow unless it takes a decisive stance against the leaders of the separatist movement. It therefore introduces a bill in parliament that allows detaining “internal terrorists” without trial for up to twelve months. Most constitutional lawyers consider this law unconstitutional. We could then elicit parliamentarians’ willingness to vote for this law in two scenarios: one where a hypothetical bill of rights contains many aspirational rights and another where the bill of rights only recognizes traditional, first-generation civil liberties. Yet even this design has a serious problem: it is very likely that parliamentarians would vote depending on their intuitive sense of the de facto constitutional order in their country. Their attitudes and views on this matter would be very difficult to manipulate by a mere experimental treatment.

An abstract design as in Leibovitch and Stremitzer (2021) uses a different strategy: it lets participants perform abstract, real-effort tasks, presented to them without any particular framing that situates them in some special context. We simply describe to participants what they need to know in order to perform the task, e.g., transcribing letters from ancient Greek into the Latin alphabet using a transliteration table. The treatments are that participants are confronted with a set of goals: In one treatment, one of these goals is overly ambitious, while in the other treatment the goal on that same dimension is realistic. All the other goals are identical. We can then study whether there is a treatment effect on effort of participants on the task. In this design, participants play an actual game with real payoff consequences. They get paid a fixed wage with the benefit of their effort going to third parties. This incentive structure tracks that of civil servants. But no mention is made of any particular decision problem or of any institutional detail. In this sense, the design is completely abstract. Finding results consistent with the inverse relationship between the ambition of the goal and the actual effort in such a setting would establish on a micro level that the hypothesized effect arises in such abstract settings.

At first glance, it might seem a bit of a stretch to claim that such a result tells us something meaningful about the effects of real constitutions. We disagree. We suppose that skeptics’ view is the consequence of two misperceptions. The first misperception is that experimentalists are claiming that they have found conclusive and dispositive proof of an effect through abstract experiments. This is not the case. Lab experiments are more like rational choice models that establish a possibility result. Thus, this criticism is largely a reaction to perceived over-claiming. The second misperception arises from a form of binary thinking that considers every weakness of any method fatal, when, in fact, every method has its unique strengths and weaknesses.

Imagine that we come up with a good theory, show observational data that is consistent with this theory, run an experiment establishing that human behavior follows the theoretical predictions in an abstract setting and disentangling the mechanism, and finally, conduct an array of vignettes to close the external validity gap. Then we would surmise that all this evidence, taken together, makes for a compelling argument.

To use a metaphor: theory usually asks whether a consistent story can be told which would produce the stipulated effect. Something that cannot exist in theory cannot exist in practice. Theory therefore answers whether an animal can exist. Abstract experiments answer the question whether the animal does exist in a zoo. It should be clear that existence in a zoo makes it much more likely the animal also exists in the wild. This question, whether it exists in a particular habitat, can be answered by observational studies, RCTs when available, and, to a limited extent, vignette studies, which help, albeit imperfectly, to bridge the gap between a general result and a particular situation in which we want to argue that the general phenomenon persists.

IV. Conclusion

Constitutional design requires making decisions about potentially consequential matters that influence the lives of many people. It is useful to have information about the effect of choices the designer might make on citizens, civil servants, judges, and policy makers. As we explained in this short Essay, RCTs are often unavailable, but we think that there is an important role for abstract experiments in theory-building in the realm of constitutional law. Those experiments should be complemented by vignette studies that close the external validity gap and confront the relevant actors with realistic decision scenarios.

1. Our bag-of-words methodology likely overestimates the number of abstract experiments in constitutional law scholarship by counting many articles that just contain very detailed references to experimental studies, rather than conducting original experimental research. A hand-count conducted by the authors suggests the number of such articles might be about one per year in absolute numbers.